Text2Relight generates a relit portrait (right) conditioned on a text prompt while preserving the original content of the input image (left).

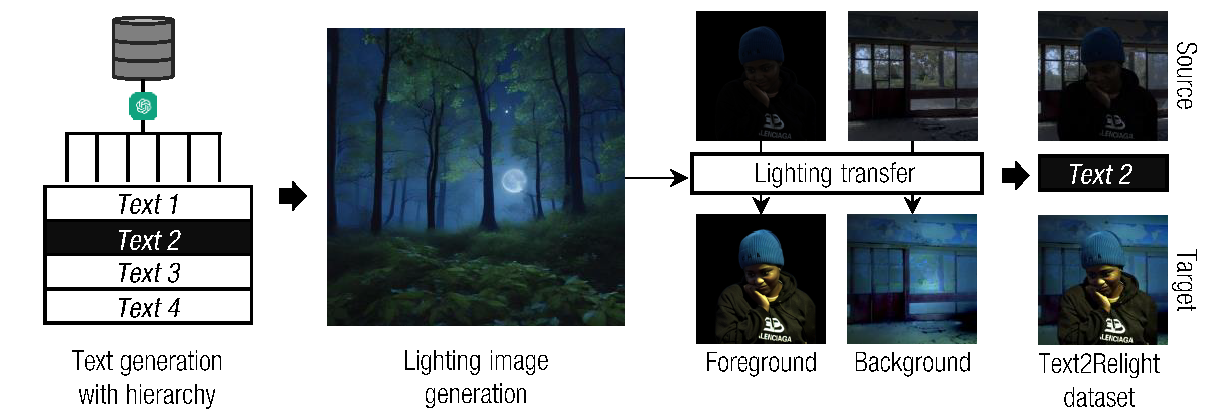

We present a lighting-aware image editing pipeline that performs single-image relighting given a portrait image and a text prompt. Our model modifies the lighting and color of both the foreground and background to match the text description. Because text is unbounded in its creativity, it can describe the lighting of a scene through any sensory feature, including temperature, emotion, smell, and time. However, modeling the mapping between such unbounded text and lighting is extremely challenging because no scalable dataset provides large numbers of paired text and relighting examples; as a result, current text-driven image editing models do not generalize to lighting-specific use cases. We overcome this problem with a novel data synthesis pipeline. First, diverse and creative text prompts describing scenes under various lighting are generated automatically from a crafted hierarchy using a large language model (e.g., ChatGPT). A text-guided image generation model then creates a lighting image that best matches each text. Conditioned on these lighting images, we perform image-based relighting of both the foreground and background, using either a single portrait image or a set of OLAT (One-Light-at-A-Time) images captured with a lightstage system. For background relighting in particular, we represent the lighting image as a set of point lights and transfer them to other background images. A generative diffusion model is trained on the resulting large-scale synthetic data with auxiliary task augmentation (e.g., portrait delighting and light positioning) to correlate the latent text and lighting distributions for text-guided portrait relighting. In our experiments, our model outperforms existing text-guided image generation models, producing high-quality portrait relighting results with strong generalization to unconstrained scenes. Project page: https://junukcha.github.io/project/text2relight/
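Image-based relighting from OLAT images relies on the linearity of light transport: a portrait under any lighting can be approximated as a weighted sum of its one-light-at-a-time images, where each weight is the lighting's RGB intensity in that light's direction. The NumPy sketch below illustrates this idea. It assumes, as a simplification the text does not state, that the lighting image is used as a linear equirectangular environment map (+y up, map center facing -z), sampled at the nearest pixel for each light direction, and it ignores per-light solid-angle weighting. The function names are hypothetical.

```python
import numpy as np

def direction_to_equirect_pixel(d, height, width):
    """Map unit direction(s) (x, y, z), +y up, to nearest (row, col) in an
    equirectangular map. This is one common convention, assumed here."""
    d = d / np.linalg.norm(d, axis=-1, keepdims=True)
    theta = np.arccos(np.clip(d[..., 1], -1.0, 1.0))  # polar angle from +y, in [0, pi]
    phi = np.arctan2(d[..., 0], -d[..., 2])           # azimuth, in [-pi, pi]
    row = np.clip((theta / np.pi * height).astype(int), 0, height - 1)
    col = np.clip(((phi + np.pi) / (2 * np.pi) * width).astype(int), 0, width - 1)
    return row, col

def relight_with_olat(olat, light_dirs, env_map):
    """Relight a subject as a weighted sum of its OLAT images.

    olat:       (N, H, W, 3) linear images, one per lightstage light.
    light_dirs: (N, 3) direction of each light as seen from the subject.
    env_map:    (He, We, 3) linear equirectangular lighting image.
    """
    rows, cols = direction_to_equirect_pixel(light_dirs, *env_map.shape[:2])
    weights = env_map[rows, cols]                      # (N, 3) RGB weight per light
    # Sum over lights: out[h, w, c] = sum_n olat[n, h, w, c] * weights[n, c]
    return np.einsum("nhwc,nc->hwc", olat, weights)
```

Because the result is linear in the weights, scaling the lighting image by a constant scales the relit portrait by the same constant, and a uniformly white lighting image reproduces the plain sum of the OLAT images.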

Overview of the data synthesis pipeline. We first generate a text prompt from a language hierarchy and use it to generate a lighting image. We then transfer the lighting from the lighting image to a portrait image captured either with a lightstage or in the real world (with background inpainting). These form the training dataset for our Text2Relight model.

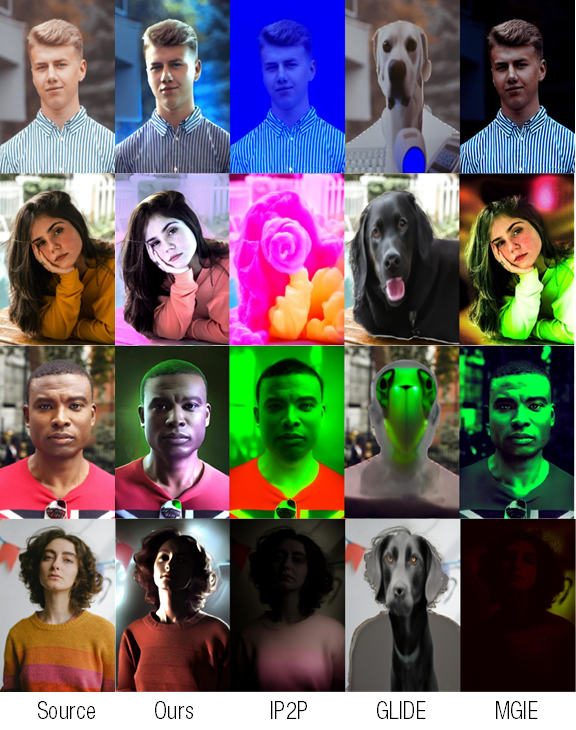
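The exact language hierarchy is not specified here. As a minimal, hypothetical sketch, the code below crosses the sensory categories mentioned above (temperature, emotion, smell, time) with illustrative concepts and light sources, and expands every leaf into one instruction for a large language model. The concepts, light sources, and the `build_llm_requests` helper are invented for illustration, and the actual LLM call (e.g., to ChatGPT) is omitted.

```python
from itertools import product

# Hypothetical hierarchy: the top-level sensory categories follow the text,
# while the concepts under each category are illustrative placeholders.
HIERARCHY = {
    "temperature": ["freezing winter night", "scorching desert noon"],
    "emotion": ["nostalgic", "eerie"],
    "smell": ["freshly baked bread", "sea breeze"],
    "time": ["golden hour", "midnight"],
}
# Hypothetical second axis of the hierarchy: the kind of light source.
LIGHT_SOURCES = ["sunlight", "neon sign", "candle"]

def build_llm_requests(hierarchy, light_sources):
    """Expand every (category, concept, light source) leaf into one LLM instruction."""
    requests = []
    for (category, concepts), source in product(hierarchy.items(), light_sources):
        for concept in concepts:
            requests.append(
                f"Write a one-sentence description of a scene lit by {source} "
                f"that evokes {concept} ({category}). Focus on the color, "
                f"direction, and intensity of the lighting."
            )
    return requests
```

Each returned string would be sent to the LLM, and each generated description would in turn prompt a text-to-image model to produce a lighting image. Enumerating leaves of a hierarchy, rather than asking the LLM for free-form prompts, is one simple way to keep the generated prompts spread across categories.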

Qualitative comparison with the text prompts 'The blue light of the computer monitor', 'Sweet cotton candy', 'Eerie glow of green fluorescent lighting', and 'The chilling atmosphere of the fear-inducing dark room'. We compare our method with IP2P (Brooks, Holynski, and Efros 2023), GLIDE (Nichol et al. 2021), and MGIE (Fu et al. 2023).