Junuk Cha

About Me

My name is Junuk Cha. I graduated from the combined MS/PhD program of the Artificial Intelligence Graduate School (AIGS) at UNIST, where I was a member of the UVL Lab and was advised by Prof. Seungryul Baek. I obtained my BS degree (2021) from the Dept. of Mechanical Engineering at UNIST.

Email:

- Official: junukcha@kaist.ac.kr

- Personal: junukcha@gmail.com

Research Interests

- Computer vision and deep learning

- 3D humans (recognition, reconstruction, and generation)

- AI for robotics

- Text-guided 2D image editing

- Audio-driven talking head synthesis

Experiences

- Postdoc at AMI Lab, KAIST, Daejeon, Republic of Korea (Aug. 2025 - Present)

- Humanoid Motion Generation & Robot Pose Estimation (Under review at CVPR 2026)

- PI: Tae-Hyun Oh

- Postdoc at KETI, Pangyo, Republic of Korea (Mar. 2025 - Jul. 2025)

- Sign Language Fingerspelling Recognition (Under review at CVPR 2026)

- PI: Han-Mu Park

- Research Intern at Inria, Nice, France (Sep. 2024 - Nov. 2024)

- EmoTalkingGaussian: Continuous Emotion‑conditioned Talking Head Synthesis (arXiv 2025)

- Collaborators: Seongro Yoon, Valeriya Strizhkova, Francois Bremond

- Research Scientist/Engineer Intern at Adobe, San Jose, CA, USA (Feb. 2024 - May 2024)

- Text2Relight: Creative Portrait Relighting with Text Guidance (AAAI 2025)

- Collaborators: Jae Shin Yoon, Mengwei Ren, He Zhang, Krishna Kumar Singh, Yannick Hold‑Geoffroy, David Seunghyun Yoon, HyunJoon Jung

- M.S./Ph.D. in Artificial Intelligence, UNIST, South Korea, Mar. 2021 - Feb. 2025

- B.S. in Mechanical Engineering, UNIST, South Korea, Mar. 2015 - Feb. 2021

- Sergeant, Republic of Korea Army, Discharged, Jan. 2018 - Sep. 2019

Publications

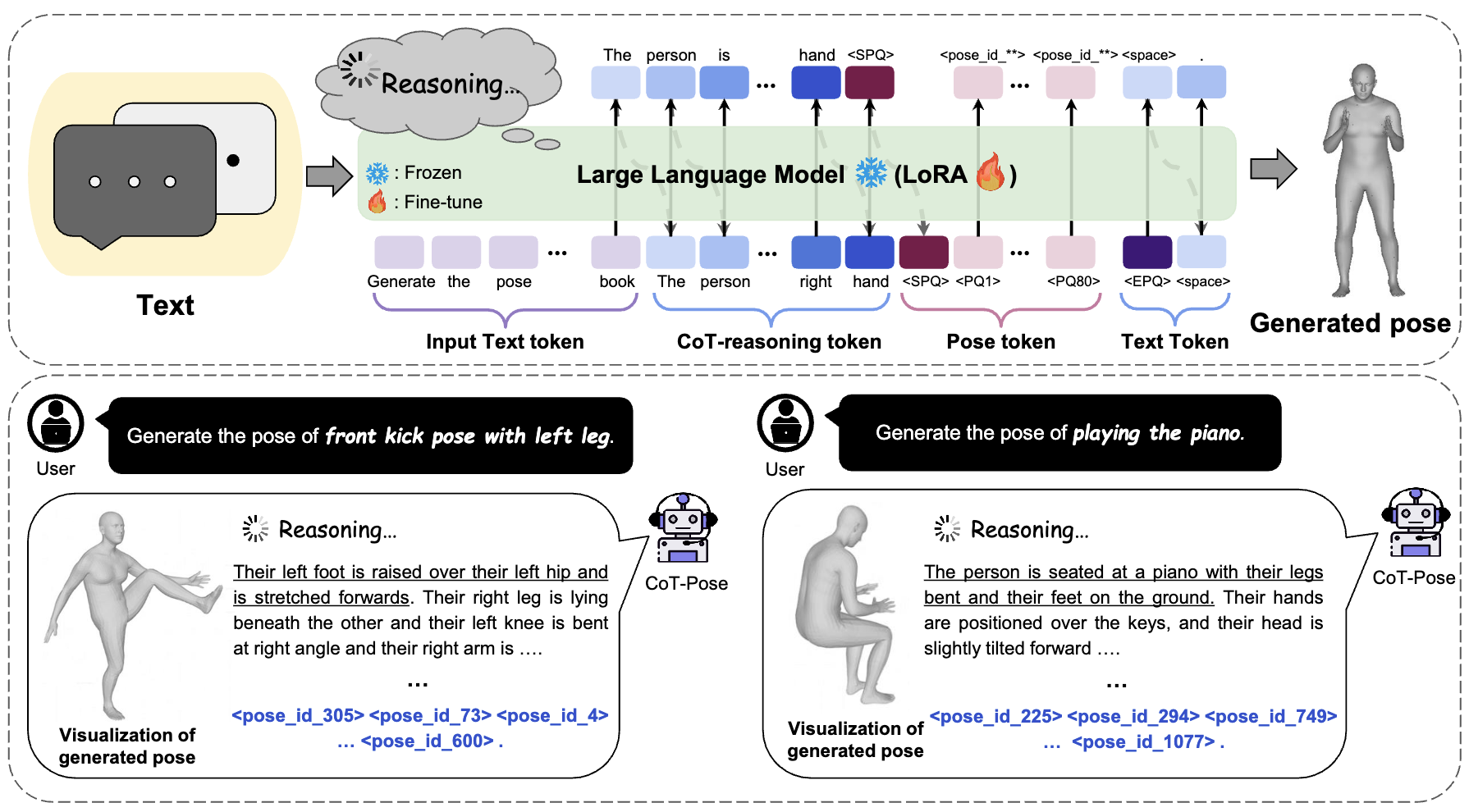

- CoT-Pose: Chain-of-Thought Reasoning for 3D Pose Generation from Abstract Prompts

Junuk Cha*, Jihyeon Kim*.

in Proc. of IEEE International Conference on Computer Vision (ICCV) workshop, Honolulu, USA, 2025.

Equal contribution*.

[Paper (arxiv)] [Code]

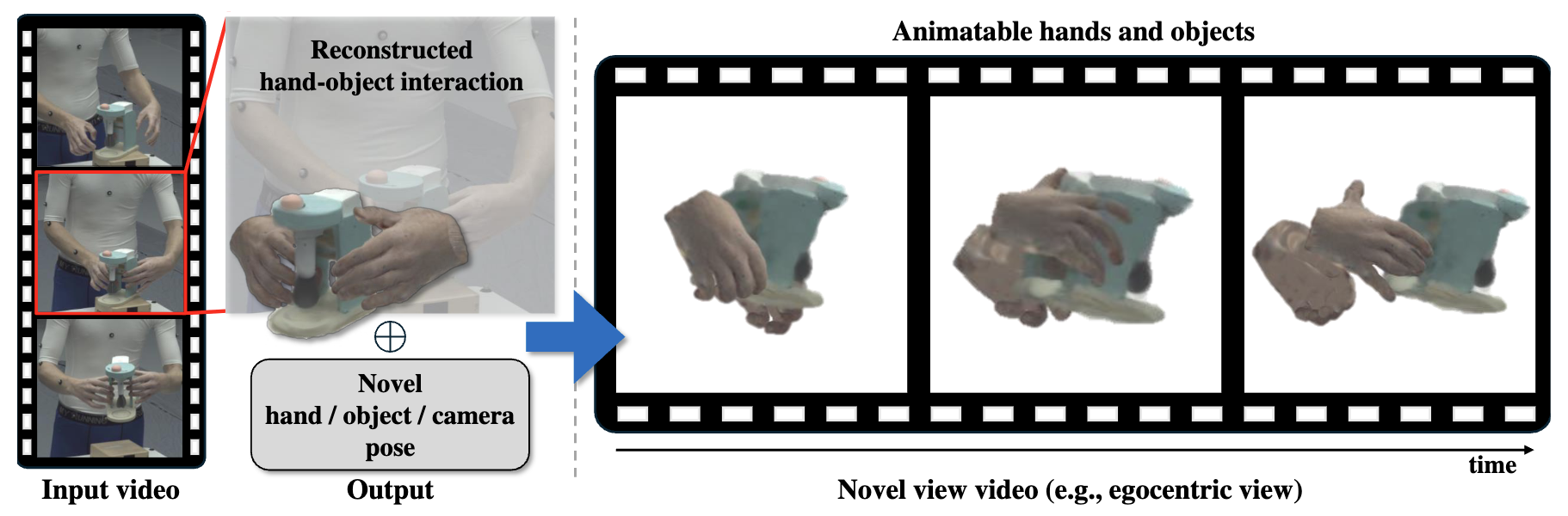

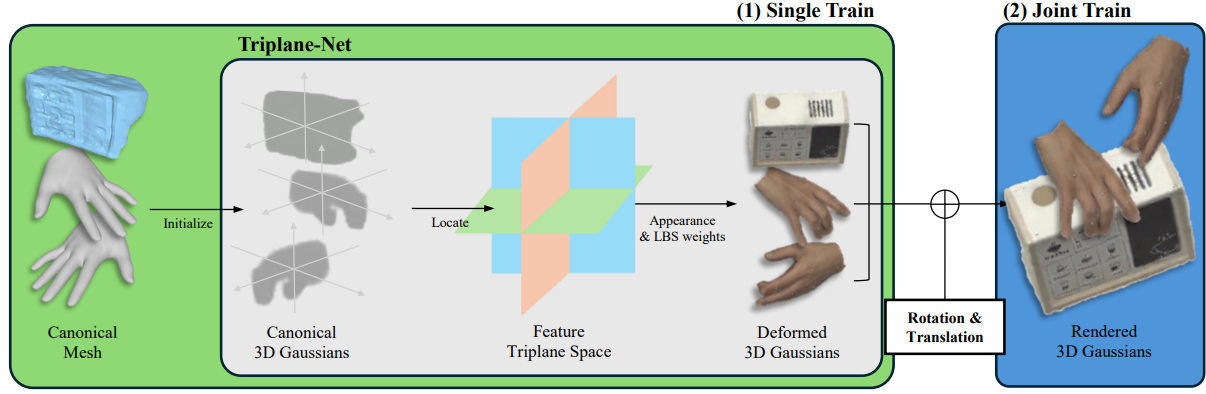

- BIGS: Bimanual Category-agnostic Interaction Reconstruction from Monocular Videos via 3D Gaussian Splatting

Jeongwan On, Kyeonghwan Gwak, Gunyoung Kang, Junuk Cha, Soohyun Hwang, Hyein Hwang, Seungryul Baek.

in Proc. of IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), Nashville, USA, 2025.

[Paper] [Suppl.]

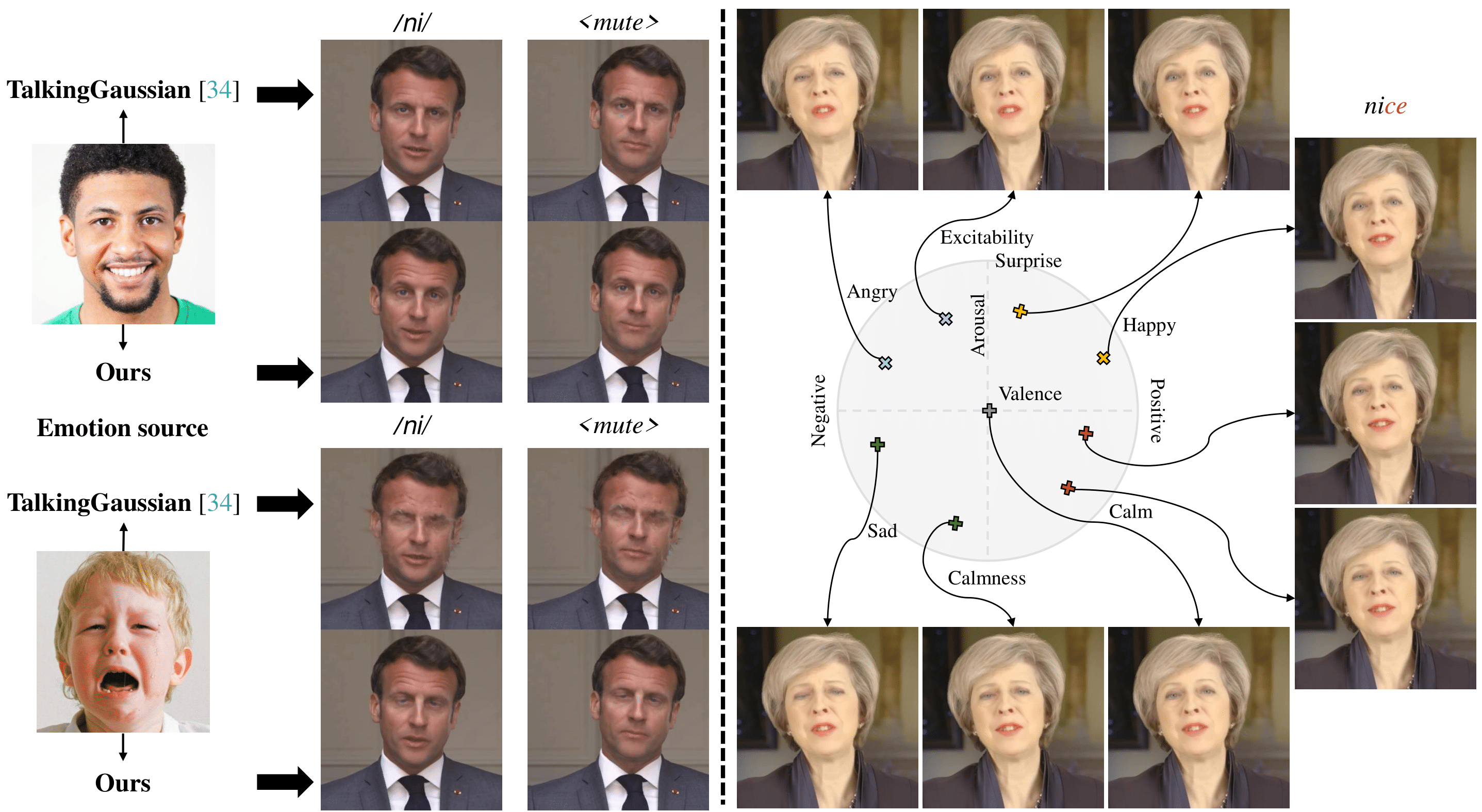

- EmoTalkingGaussian: Continuous Emotion‑conditioned Talking Head Synthesis

Junuk Cha, Seongro Yoon, Valeriya Strizhkova, Francois Bremond, Seungryul Baek.

in arXiv 2025.

[Paper]

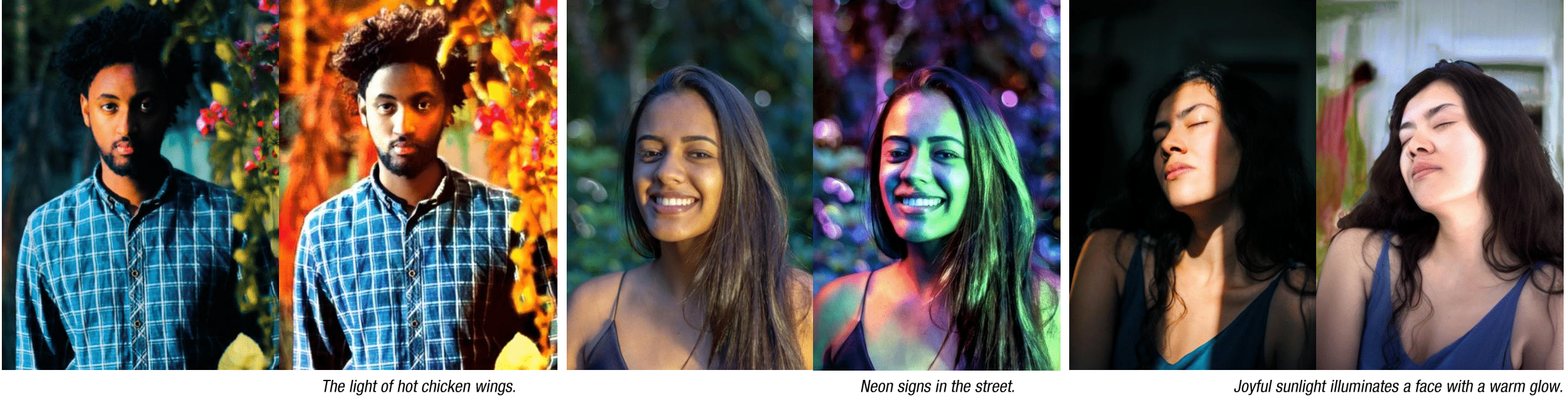

- Text2Relight: Creative Portrait Relighting with Text Guidance

Junuk Cha, Mengwei Ren, Krishna Kumar Singh, He Zhang, Yannick Hold-Geoffroy, Seunghyun Yoon, HyunJoon Jung, Jae Shin Yoon*, Seungryul Baek*.

in Proc. of AAAI Conference on Artificial Intelligence (AAAI), Philadelphia, USA, 2025.

Co-last authors*.

[Paper (arxiv)]

- 3DGS-based Bimanual Category-agnostic Interaction Reconstruction

Jeongwan On, Kyeonghwan Gwak, Gunyoung Kang, Hyein Hwang, Soohyun Hwang, Junuk Cha, Jaewook Han, Seungryul Baek.

in Technical Report of European Conference on Computer Vision (ECCV), Milan, Italy, 2024.

Won 1st place in the bimanual category-agnostic interaction reconstruction challenge.

[Paper]

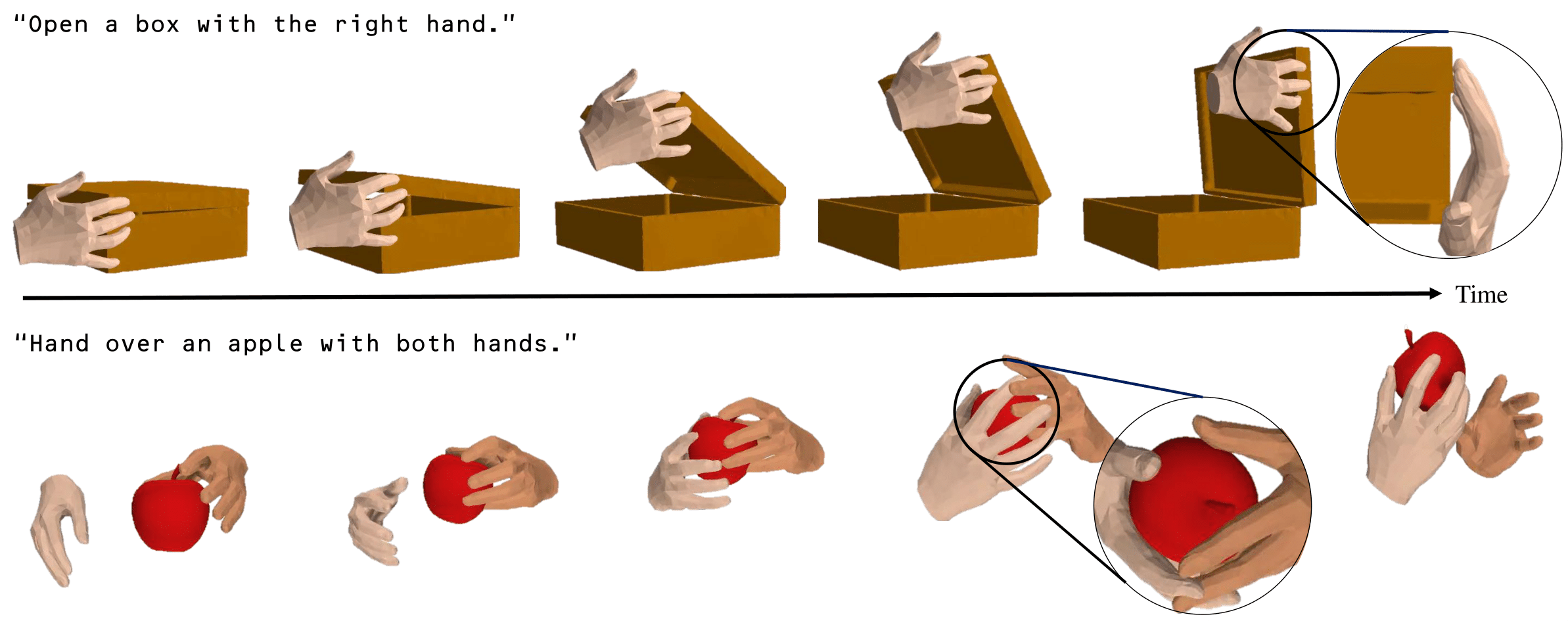

- Text2HOI: Text-guided 3D Motion Generation for Hand-Object Interaction

Junuk Cha, Jihyeon Kim, Jae Shin Yoon*, Seungryul Baek*.

in Proc. of IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), Seattle, USA, 2024.

Co-last authors*.

[Paper][Supp][Arxiv][Project]

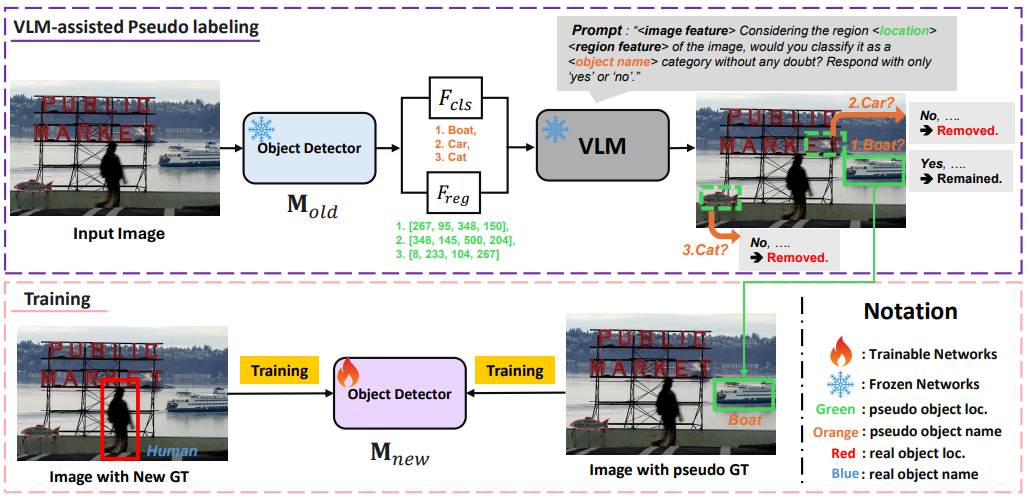

- VLM-PL: Advanced Pseudo Labeling Approach for Class Incremental Object Detection via Vision-Language Model

Junsu Kim, Yunhoe Ku*, Jihyeon Kim*, Junuk Cha, Seungryul Baek.

in Proc. of IEEE Conf. on Computer Vision and Pattern Recognition (CVPR) workshop, Seattle, USA, 2024.

Equal contribution*.

[Paper][Arxiv]

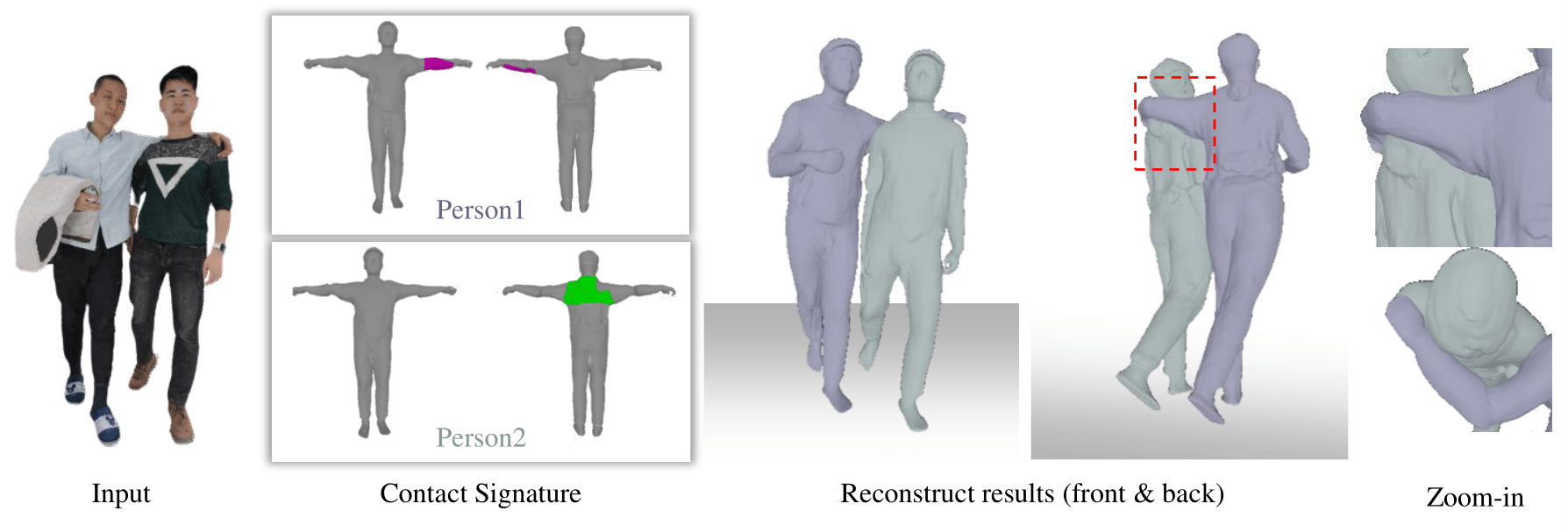

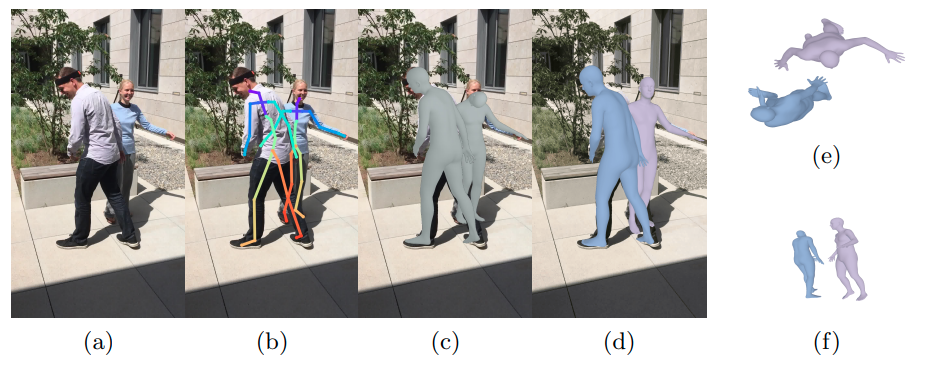

- 3D Reconstruction of Interacting Multi-Person in Clothing from a Single Image

Junuk Cha, Hansol Lee, Jaewon Kim, Bao Truong, Jae Shin Yoon*, Seungryul Baek*.

in Proc. of IEEE Winter Conf. on Applications of Computer Vision (WACV), Waikoloa, USA, 2024.

Co-last authors*.

[PDF]

- Dynamic Appearance Modeling of Clothed 3D Human Avatars using a Single Camera

Hansol Lee, Junuk Cha, Yunhoe Ku, Jae Shin Yoon*, Seungryul Baek*.

in arXiv 2023.

Co-last authors*.

[PDF]

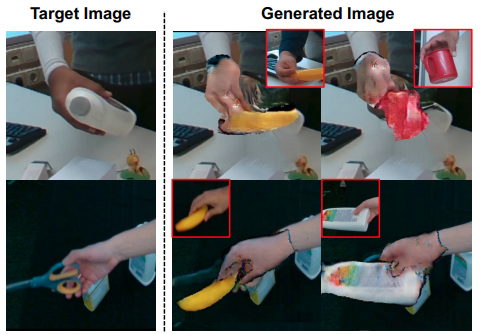

- HOReeNet: 3D-aware Hand-Object Grasping Reenactment

Changhwa Lee, Junuk Cha, Hansol Lee, Seongyeong Lee, Donguk Kim, Seungryul Baek.

in arXiv 2022.

[PDF]

- Multi-Person 3D Pose and Shape Estimation via Inverse Kinematics and Refinement

Junuk Cha, Muhammad Saqlain, GeonU Kim, Mingyu Shin, Seungryul Baek.

in Proc. of European Conference on Computer Vision (ECCV), Tel Aviv, Israel, 2022.

[PDF][Code]

- Learning 3D Skeletal Representation From Transformer for Action Recognition

Junuk Cha, Muhammad Saqlain, Donguk Kim, Seungeun Lee, Seongyeong Lee, Seungryul Baek.

in IEEE Access 2022 (IF: 3.367).

[PDF]

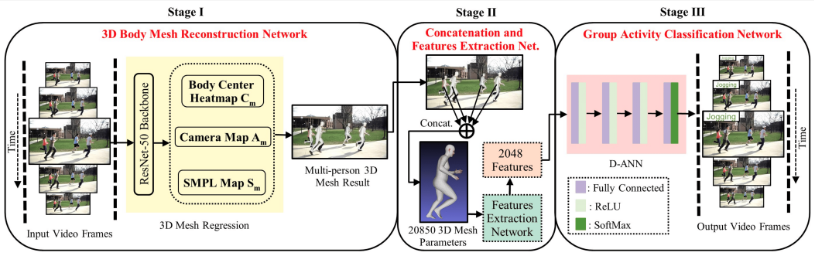

- 3DMesh-GAR: 3D human body mesh-based method for group activity recognition

Muhammad Saqlain, Donguk Kim, Junuk Cha, Changhwa Lee, Seongyeong Lee, Seungryul Baek.

in Sensors 2022 (IF: 3.576).

[PDF]

- Towards Single 2D Image-level Self-supervision for 3D Human Pose and Shape Estimation

Junuk Cha, Muhammad Saqlain, Changhwa Lee, Seongyeong Lee, Seungeun Lee, Donguk Kim, Won-Hee Park, Seungryul Baek.

in Journal of Applied Sciences 2021 (IF: 2.679).

[PDF][Code]

Awards

- The 8th Workshop on Observing and Understanding Hands in conjunction with ECCV 2024, 1st place in HANDS workshop challenge

[Certificate]

- The 7th Workshop on Observing and Understanding Hands in conjunction with ICCV 2023, 3rd place in HANDS workshop challenge

[Certificate]

Academic Activities

- Program Committee (Reviewer) at CVPR 2025, 2026.

- Program Committee (Reviewer) at ICCV 2025.

- Program Committee (Reviewer) at ICLR 2025, 2026.

- Program Committee (Reviewer) at AAAI 2023, 2024, 2025, 2026.

- Program Committee (Reviewer) at NeurIPS 2024.

- Program Committee (Reviewer) at BMVC 2023, 2024, 2025.

Patents

- Dangerous Region Approaching Worker Detection and Stopping Machine Device Based on Thermal Images, 2023. Applicant: UNIST and Hyundai

Projects

- Development of vision recognition module for ARTG control, Ministry of Oceans and Fisheries, Jun. 2023 ~ Dec. 2023

- Detecting objects and predicting the future trajectories of multiple agents with velocity

- Development of an optimized human detection system algorithm for production factory environments, Hyundai, Jun. 2022 ~ Dec. 2023

- Detecting human segmentation masks and bounding boxes

- Improving performance for corner cases such as occluded people

Teaching Assistant & Mentoring

- Mentoring

- 2nd Ulju AI 4.0 Studio at UNIST, Ulsan, South Korea, Mar. 2022 ~ Nov. 2022.

- Mentoring Kyeongmin Kim on a non-line-of-sight perception task at AMI Lab, KAIST, Daejeon, South Korea, Dec. 2025 - Present.

- TA

- Navy AI professionals training course TA, Jinhae, South Korea, 12 Sep. 2023.

- 5th AI Novatus Academia PBL (Computer Vision part) at UNIST AI Innovation Park, Ulsan, South Korea, Mar. 2023 ~ Jun. 2023.

- 1st Gyungnam AI Novatus Academia (Computer Vision part) at UNIST AI Innovation Park, Masan, South Korea, 15 Apr. 2022.

- 3rd AI Novatus Academia (Computer Vision part) at UNIST AI Innovation Park, Ulsan, South Korea, 25 Feb. 2022.

- Lecture TA

- 2023 Fall AI51601 Computer Vision.

- 2021 Fall ITP11701 Introduction to AI Programming 2.